Your baseline model used X_train to fit the model. Then you're using the fitted model to score the X_train sample. This is like cheating, because the model will naturally perform best when you evaluate it on data that it has already seen.

    train_dataset = IMDbDataset(train_encodings, train_labels)
    val_dataset = IMDbDataset(val_encodings, val_labels)
    test_dataset = IMDbDataset(test_encodings, test_labels)
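To make the point about scoring on X_train concrete, here is a minimal sketch, assuming synthetic data with noisy labels and a hand-written 1-nearest-neighbour classifier (neither comes from the original post). A model that memorises its training set scores perfectly on X_train, while the held-out score is noticeably lower.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: the label depends on the first feature, with 20% label noise.
X = rng.normal(size=(400, 2))
y = (X[:, 0] > 0).astype(int)
flip = rng.random(400) < 0.2
y = np.where(flip, 1 - y, y)

# Hold out the last 100 rows; the model never sees them while fitting.
X_train, y_train = X[:300], y[:300]
X_test, y_test = X[300:], y[300:]

def predict_1nn(X_fit, y_fit, X_query):
    """Return the label of the closest fitted point for each query row."""
    dists = ((X_query[:, None, :] - X_fit[None, :, :]) ** 2).sum(axis=2)
    return y_fit[dists.argmin(axis=1)]

# Scoring on the data used for fitting: every point is its own nearest neighbour.
train_acc = (predict_1nn(X_train, y_train, X_train) == y_train).mean()
# Scoring on unseen data gives the honest estimate.
test_acc = (predict_1nn(X_train, y_train, X_test) == y_test).mean()

print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
```

The gap between the two numbers is the reason to report the score on a validation or test split rather than on X_train.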

Wd = weight tensors of layer d that generate an activation
Wid = weight tensor that generates an activation aᵢ

    from torch.utils.data import DataLoader, TensorDataset

    # Normalize input data to have zero mean and unit standard deviation
    train_loader = DataLoader(TensorDataset(X, L), batch_size=batch_size, shuffle=True)
    # Initialize the weights of the network with Gaussian distribution
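The setup steps named in these comments (normalising the inputs, shuffling into mini-batches, Gaussian weight initialisation) can be sketched in plain numpy. This is only an illustration under assumptions: the data is random, normalisation is done per feature, the layer sizes are made up, and the standard deviation 1/sqrt(fan_in) is one common choice rather than something the source specifies.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 1000 samples with 20 features, 10 classes.
X = rng.uniform(0.0, 255.0, size=(1000, 20))
L = rng.integers(0, 10, size=1000)
batch_size = 64

# Normalize input data to have zero mean and unit standard deviation (per feature).
X_norm = (X - X.mean(axis=0)) / X.std(axis=0)

# Initialize the weights of each layer from a Gaussian distribution.
# The 1/sqrt(fan_in) scale is an assumption, not taken from the source.
layer_sizes = [20, 16, 10]
weights = [
    rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]

def minibatches(X, L, batch_size, rng):
    """Yield shuffled (inputs, labels) batches, like a DataLoader with shuffle=True."""
    order = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        yield X[idx], L[idx]

batch_lengths = [len(xb) for xb, lb in minibatches(X_norm, L, batch_size, rng)]
print(len(batch_lengths), "batches,", sum(batch_lengths), "samples")
```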

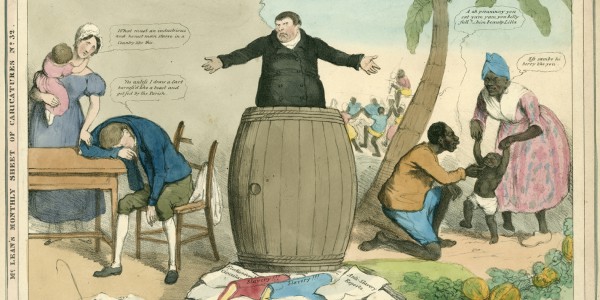

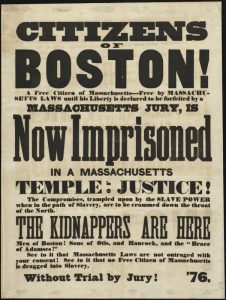


    # convert the arguments passed during onnx.export call

    C = Number of rounds to update parameters
    function RSO(X, L, C, model, batch_size, device)

    def __init__(self, input_size, hidden_size, output_size, num_layers, matching_in_out=False, batch_size=1):
        self.matching_in_out = matching_in_out  # length of input vector matches the length of output vector
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers)
        self.hidden2out = nn.Linear(hidden_size, output_size)

        lstm_out, _ = self.lstm(feature_list.view(len(feature_list), 1, -1))
        output_space = self.hidden2out(lstm_out.view(len(feature_list), -1))
        output_scores = torch.sigmoid(output_space)  # we'll need to check if we need this sigmoid

        cur_ft_tensor = feature_list  #.view()
        cur_ft_tensor = cur_ft_tensor.view()
        lstm_out, self.hidden = self.lstm(cur_ft_tensor, self.hidden)

        # return torch.rand(self.num_layers, self.batch_size, self.hidden_size)
        return (torch.rand(self.num_layers, self.batch_size, self.hidden_size).to(device),
                torch.rand(self.num_layers, self.batch_size, self.hidden_size).to(device))

I am aware of this question, but I'm willing to go as low level as possible. I can work with numpy arrays instead of tensors, and reshape instead of view, and I don't need a device setting.

Based on the class definition above, what I can see here is that I only need the following components from torch to get an output from the forward function: nn.LSTM, nn.Linear, and torch.sigmoid.

I think I can easily implement the sigmoid function using numpy. However, can I have some implementation for nn.LSTM and nn.Linear using something that does not involve pytorch? Also, how will I use the weights from the state dict in the new class?

So, the question is, how can I "translate" this RNN definition into a class that doesn't need pytorch, and how do I use the state dict weights for it? Alternatively, is there a "light" version of pytorch that I can use just to run the model and yield a result?

EDIT

I think it might be useful to include the numpy/scipy equivalent for both nn.LSTM and nn.Linear. It would help us compare the numpy output to the torch output for the same code, and give us some modular code/functions to use. Specifically, a numpy equivalent for the following would be great:

    lstm_out, (hidden_a, hidden_b) = self.lstm(x, (h0, c0))

    return (torch.rand(self.num_layers, self.batch_size, self.hidden_size).to(device).detach(),
            torch.rand(self.num_layers, self.batch_size, self.hidden_size).to(device).detach())
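As a rough answer to the question, here is a minimal numpy sketch of the forward pass of nn.LSTM followed by nn.Linear and a sigmoid. It assumes a unidirectional LSTM with no dropout and the default (seq_len, batch, input_size) layout, and it relies on PyTorch's documented gate order (input, forget, cell, output) inside the stacked weight matrices. The `weights` dict is a random stand-in for a converted state dict; with a real model it would be something like `{k: v.cpu().numpy() for k, v in model.state_dict().items()}`, whose keys (`lstm.weight_ih_l0`, `hidden2out.weight`, and so on) follow from the attribute names in the class above. The helper names `sigmoid`, `linear`, and `lstm_forward` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def linear(x, weight, bias):
    # nn.Linear stores weight as (out_features, in_features).
    return x @ weight.T + bias

def lstm_forward(x, weights, num_layers, h0, c0):
    """x: (seq_len, batch, input_size); h0, c0: (num_layers, batch, hidden_size)."""
    h_n, c_n = [], []
    layer_input = x
    for layer in range(num_layers):
        w_ih = weights[f"lstm.weight_ih_l{layer}"]  # (4*hidden, in_dim)
        w_hh = weights[f"lstm.weight_hh_l{layer}"]  # (4*hidden, hidden)
        b = weights[f"lstm.bias_ih_l{layer}"] + weights[f"lstm.bias_hh_l{layer}"]
        h, c = h0[layer], c0[layer]
        outputs = []
        for x_t in layer_input:
            gates = x_t @ w_ih.T + h @ w_hh.T + b  # (batch, 4*hidden)
            i, f, g, o = np.split(gates, 4, axis=1)  # PyTorch gate order
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
            h = sigmoid(o) * np.tanh(c)
            outputs.append(h)
        layer_input = np.stack(outputs)  # becomes the input of the next layer
        h_n.append(h)
        c_n.append(c)
    return layer_input, (np.stack(h_n), np.stack(c_n))

# Stand-in for a converted state dict (random values, assumed sizes).
rng = np.random.default_rng(0)
input_size, hidden_size, output_size, num_layers = 3, 4, 2, 2
weights = {}
for layer in range(num_layers):
    in_dim = input_size if layer == 0 else hidden_size
    weights[f"lstm.weight_ih_l{layer}"] = rng.normal(size=(4 * hidden_size, in_dim))
    weights[f"lstm.weight_hh_l{layer}"] = rng.normal(size=(4 * hidden_size, hidden_size))
    weights[f"lstm.bias_ih_l{layer}"] = rng.normal(size=4 * hidden_size)
    weights[f"lstm.bias_hh_l{layer}"] = rng.normal(size=4 * hidden_size)
weights["hidden2out.weight"] = rng.normal(size=(output_size, hidden_size))
weights["hidden2out.bias"] = rng.normal(size=output_size)

# Mirrors the first forward fragment above: reshape instead of view, batch of 1.
feature_list = rng.normal(size=(5, input_size))
x = feature_list.reshape(len(feature_list), 1, -1)
# nn.LSTM starts from zeros when no state is passed; pass other arrays to mimic (h0, c0).
h0 = np.zeros((num_layers, 1, hidden_size))
c0 = np.zeros((num_layers, 1, hidden_size))

lstm_out, (h_n, c_n) = lstm_forward(x, weights, num_layers, h0, c0)
output_space = linear(lstm_out.reshape(len(feature_list), -1),
                      weights["hidden2out.weight"], weights["hidden2out.bias"])
output_scores = sigmoid(output_space)
print(output_scores.shape)
```

To compare against torch, feed the same input and initial state to both implementations and check the outputs with `np.allclose` at a loose tolerance, since torch defaults to float32.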
